AI Confidence Probabilities

Something that I’m going to be delivering soon is a demonstration of AI tools to my staff. We’re looking at how we can use these tools to help bolster our mission, especially given the current environment and shifts in federal funding.

One of the things I’ve been thinking about lately is how we can get the most out of these AI tools. I am a big believer in having an interrogative back-and-forth with the system to give the tool the best possible chance of success. It is a gross oversimplification, but in non-technical terms, AI tools can be thought of as a fancy auto-complete system. Just as we can’t read minds, our computer overlords can’t read minds either.

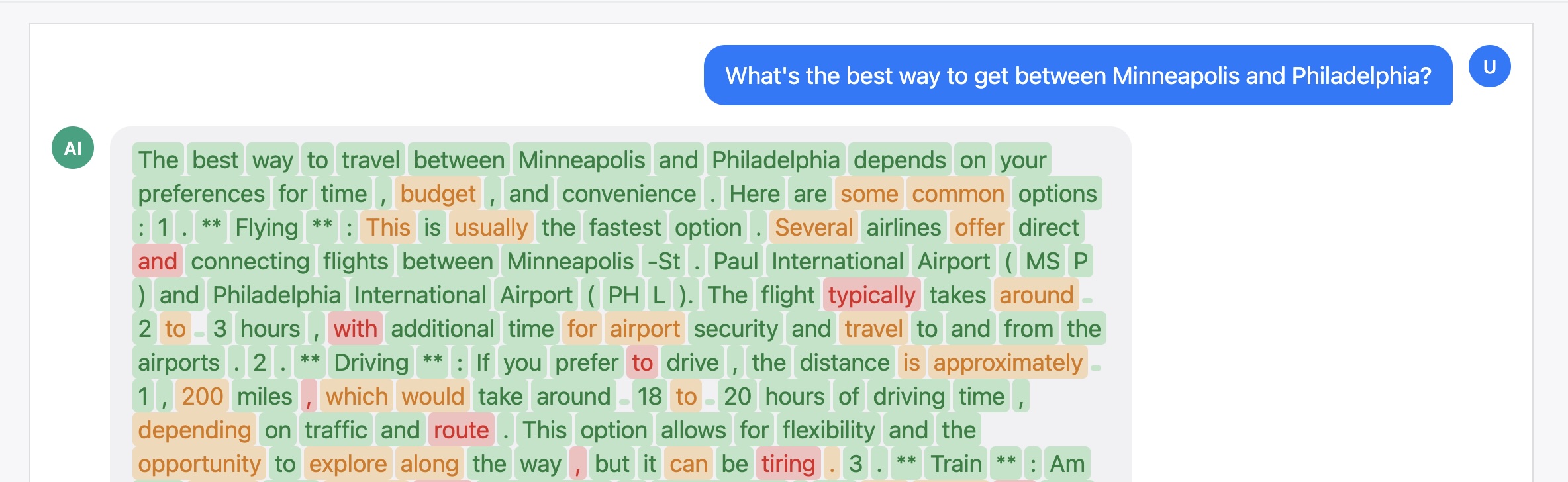

As part of the demo, I’m going to have the AI system think out loud. AI systems work with tokens, the basic units of text that AI models process (a token is often a whole word or a piece of one). When generating a response, the model assigns a probability to each candidate next token, and those probabilities can be expressed as logprobs (logarithmic probabilities, the natural log of each probability). The model then chooses its next token from among the likely candidates, which is how it produces text that follows from what came before.
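To make the relationship between scores, probabilities, and logprobs concrete, here is a minimal sketch in Python. It assumes a toy model that has scored only three hypothetical candidate tokens for the text after "The sky is"; a real model scores tens of thousands of tokens at every step. The raw scores (logits) are turned into probabilities with a softmax, and a logprob is simply the natural log of each probability, so `math.exp()` turns it back into a percentage.

```python
import math

def softmax(logits):
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max score for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def to_logprobs(probs):
    """A logprob is the natural log of a probability, so it is always <= 0."""
    return {tok: math.log(p) for tok, p in probs.items()}

# Hypothetical scores for the token that follows "The sky is"
logits = {" blue": 4.0, " clear": 2.0, " falling": 0.5}
probs = softmax(logits)
logprobs = to_logprobs(probs)

for tok in logits:
    # math.exp() undoes the log and recovers the probability
    print(f"{tok!r}: logprob={logprobs[tok]:.3f}  probability={math.exp(logprobs[tok]):.1%}")
```

A logprob close to 0 means the model was nearly certain about that token; a large negative logprob means it was a long shot.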

For this demonstration, I used Claude and GitHub Copilot to help me build a demo that shows how confident an AI model is in what it’s saying. It’s important to note that an AI model’s confidence only reflects how probable the model considers each token: it has nothing to do with actual facts or real-world certainty. The demo uses the openai/gpt-4o-mini model on OpenRouter, so you’ll need an OpenRouter API key.
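If you want a feel for what a request like this involves before opening the repo, here is a rough sketch in Python using only the standard library. It is not the code from my demo. It assumes OpenRouter’s OpenAI-compatible chat completions endpoint accepts the OpenAI-style `logprobs` and `top_logprobs` parameters and returns per-token logprobs under `choices[0].logprobs.content`; whether logprobs actually come back can depend on the model and provider. The `OPENROUTER_API_KEY` environment variable name and the helper function names are my own choices for illustration.

```python
import json
import math
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

def ask_with_logprobs(prompt, api_key, model="openai/gpt-4o-mini"):
    """Send one chat request and ask for per-token logprobs (OpenAI-style parameters)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "logprobs": True,   # ask for the logprob of each generated token
        "top_logprobs": 3,  # plus the 3 most likely alternatives at each step
    }
    req = urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)

def token_confidences(response):
    """Pull (token, probability) pairs out of an OpenAI-style chat completion response."""
    logprobs = response["choices"][0].get("logprobs") or {}
    # Each logprob is a natural log, so math.exp() converts it back to a probability
    return [(item["token"], math.exp(item["logprob"])) for item in logprobs.get("content") or []]

if __name__ == "__main__":
    # Hypothetical variable name; only calls the API if a key is present
    key = os.environ.get("OPENROUTER_API_KEY")
    if key:
        reply = ask_with_logprobs("In one word, what color is the sky?", key)
        for token, p in token_confidences(reply):
            print(f"{token!r}: {p:.1%}")
```

Keeping the parsing in its own function makes it easy to check against a saved response without spending API credits, and it returns an empty list if the provider sends no logprobs.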

Go find it on my GitHub at github.com/edwardjensen/ai-confidence-demo and let me know what you think!